The first way

sudo apt-get clean

clears the local cache of downloaded package files.

sudo apt-get autoclean

removes only those cached package files that can no longer be downloaded (obsolete versions).

sudo apt-get autoremove

removes packages that were installed automatically as dependencies and are no longer needed by anything on the system.
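To see how much disk space these commands actually free, it helps to measure the package cache before and after. Here is a minimal sketch, assuming the default Debian/Ubuntu cache location `/var/cache/apt/archives`; the helper name `apt_cache_kb` is made up for illustration and is not part of apt.

```shell
# Print the size of a directory in kilobytes.
# Defaults to the usual apt package cache; apt_cache_kb is a hypothetical helper name.
apt_cache_kb() {
    dir="${1:-/var/cache/apt/archives}"
    # du -sk prints "<kilobytes><TAB><path>"; keep only the number.
    du -sk "$dir" 2>/dev/null | awk '{print $1}'
}

# Typical use:
#   before=$(apt_cache_kb)
#   sudo apt-get clean
#   after=$(apt_cache_kb)
#   echo "freed $((before - after)) KB"
```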

The second way

With BleachBit:

sudo apt-get install bleachbit

Home users vs Servers

There are two types of Linux users:

- Home Users – i.e. Desktop

- Servers – i.e. WebServers

For home users: do whatever you want, you can’t do much damage. When you clean the cache, the system slows down for about 3 seconds and then re-populates memory with the files it needs. You can safely run the full cleanup to free as much memory as possible.

For servers (e.g. a web server or a VPS with low memory), it is much safer to clean the page cache only.

Why? Because the page cache fills up a massive part of your server’s memory; in the case of an Apache web server, 50% of memory is used for it.
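As a hedged sketch of the kernel interface that cleanups like this generally rely on: Linux exposes `/proc/sys/vm/drop_caches`, and writing 1 frees the page cache, 2 frees dentries and inodes, and 3 frees both. The wrapper name `drop_caches` and the `DROP_CACHES_FILE` override below are assumptions for illustration, not something taken from this post.

```shell
# Hypothetical wrapper around the kernel's drop_caches interface.
#   1 = free the page cache only (the conservative choice for servers)
#   2 = free dentries and inodes
#   3 = free page cache, dentries and inodes
# DROP_CACHES_FILE is an illustrative override so the function can be tried on a scratch file.
drop_caches() {
    level="${1:-1}"
    target="${DROP_CACHES_FILE:-/proc/sys/vm/drop_caches}"
    case "$level" in
        1|2|3) ;;
        *) echo "usage: drop_caches 1|2|3" >&2; return 1 ;;
    esac
    # Flush dirty pages first; the kernel only discards clean cache entries.
    sync
    echo "$level" > "$target"
}
```

Writing to the real file needs root, so on a live system this would be run from a root shell (for example `drop_caches 1` on a server, `drop_caches 3` on a desktop).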

Example scenarios

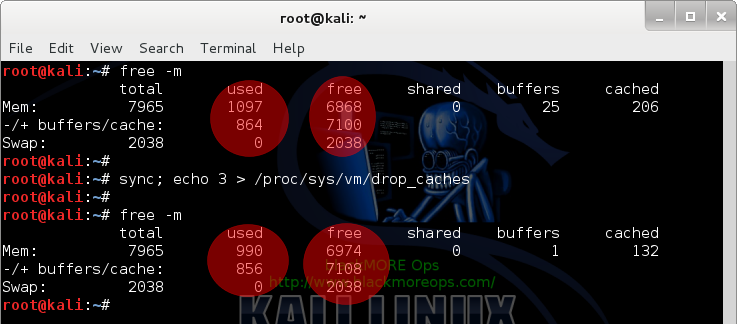

Compare memory usage before and after the cleanup to see the difference:

My Desktop

Run the following command to see how much memory is being committed:

Then run the cleanup.

As you can see, my desktop was doing next to nothing, so there’s not much to clean up.
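A before/after comparison like this can be sketched by reading the `Cached` figure from `/proc/meminfo`. This is one assumed way of measuring it, not necessarily the commands used in this post; `cached_kb` is a hypothetical helper name.

```shell
# Print the page cache size in kB as reported by the kernel.
# Takes an optional path so it can also be pointed at a saved copy of /proc/meminfo.
cached_kb() {
    # Match the field name exactly so "SwapCached:" is not picked up.
    awk '$1 == "Cached:" {print $2}' "${1:-/proc/meminfo}"
}

# Typical use (the drop itself needs root):
#   before=$(cached_kb)
#   sync && echo 1 > /proc/sys/vm/drop_caches
#   after=$(cached_kb)
#   echo "page cache shrank by $((before - after)) kB"
```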

My server

But because my server is always busy and it’s got a small memory pool (for a server), running the cleanup makes a massive difference:

How awesome is that? In an instant I recovered a massive pool of memory and my terminal session to the server became a lot more responsive.

Use cron to drop caches regularly

It’s a good idea to schedule the following in cron to clear the cache automatically at a regular interval.

Option 1: drop_caches using cron

Edit the crontab file and add the following line at the end of it.

The command will execute at 4:00am every day.
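For reference, the five leading fields of a crontab entry are minute, hour, day of month, month and day of week, so the minute field decides whether a job fires once or sixty times within the hour. A sketch of a 4:00am entry for root's crontab could look like the following; the `echo 1` level is an assumption, chosen as the conservative page-cache-only setting.

```
# min hour dom mon dow  command
# Runs once, at 04:00 every day:
0 4 * * * sync && echo 1 > /proc/sys/vm/drop_caches
# By contrast, "* 4 * * *" would run every minute from 04:00 to 04:59.
```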

Option 2: drop_caches using sysctl

Alternatively, you can also use sysctl to change the drop_caches value:
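A minimal sketch of the sysctl route, assuming the standard `vm.drop_caches` key. The function name and the `DRY_RUN` switch are hypothetical conveniences so the command can be inspected before it is run as root.

```shell
# Hypothetical helper: trigger a cache drop through sysctl.
# With DRY_RUN=1 (the default here) it only prints the command it would run.
drop_caches_sysctl() {
    level="${1:-1}"
    case "$level" in
        1|2|3) ;;
        *) echo "level must be 1, 2 or 3" >&2; return 1 ;;
    esac
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "sysctl -w vm.drop_caches=$level"
    else
        # Needs root. The write is a one-shot trigger, not a persistent setting.
        sync && sysctl -w "vm.drop_caches=$level"
    fi
}
```

Because the write is a one-time trigger, it still has to be scheduled (for example from cron) to happen regularly.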

Option 3: drop_caches using a bash script

Create a shell script and enter the following contents

Now set the permissions on the script so that it is executable

Now edit the crontab file

Enter the following line to schedule the cache clearing every day at 4am
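The steps above can be sketched as a small installer function. Everything named here is a placeholder: `install_clearcache`, the `clearcache.sh` file name, the 755 mode and the directory you pass in are assumptions, since the original script name, location and permissions are not shown.

```shell
# Write a small cache-clearing script into a directory and make it executable.
# install_clearcache and clearcache.sh are hypothetical names.
install_clearcache() {
    dir="$1"
    script="$dir/clearcache.sh"
    cat > "$script" <<'EOF'
#!/bin/sh
# Flush dirty pages, then ask the kernel to free the page cache only (level 1).
sync
echo 1 > /proc/sys/vm/drop_caches
EOF
    # rwx for the owner, r-x for group and others (an assumed mode).
    chmod 755 "$script"
    echo "$script"
}

# Typical use, followed by a matching entry in root's crontab:
#   install_clearcache /some/dir
#   0 4 * * * /some/dir/clearcache.sh
```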

Restart crond service

Restart the crond service after setting up whichever option you chose (you only need one of the options above).

Performance gain and supporting graphs

There seem to be a lot of posts and questions about whether this method should be used or not. Here’s my scenario and explanation:

I struggled for months with the Linux kernel panic issue (hung_task_timeout_secs and “blocked for more than 120 seconds”), and some tweaking allowed my VPS to function normally for a few months. Then traffic increased again (with sudden bursts of traffic from Twitter and Reddit) and I couldn’t afford to increase RAM anymore. This kernel hack (be that as it may, outside of recommended usage) allowed me to use cron and Monit to

- Clear pagecache,

- Free up enough memory to restart HTTPD automagically.

without having to do a cold restart. I am not claiming this is a perfect solution, but if used with ‘‘ and if database optimization is enabled, it is yet to fail me.
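The Monit rule itself is not shown in this post, so as a plain-shell stand-in, here is a sketch of the kind of threshold check such a setup performs: compute how much RAM is not free (page cache counts as used here, which is the point) and clear the page cache once it crosses a limit. The function name and the threshold variable are hypothetical.

```shell
# Percentage of RAM that is not free, computed as (MemTotal - MemFree) / MemTotal.
# Page cache counts as "used" in this figure. Takes an optional meminfo path.
mem_not_free_pct() {
    awk '$1 == "MemTotal:" {t = $2}
         $1 == "MemFree:"  {f = $2}
         END {if (t > 0) printf "%d\n", (t - f) * 100 / t}' "${1:-/proc/meminfo}"
}

# Typical use from a root cron job (THRESHOLD is an illustrative value):
#   THRESHOLD=97
#   if [ "$(mem_not_free_pct)" -ge "$THRESHOLD" ]; then
#       sync && echo 1 > /proc/sys/vm/drop_caches
#   fi
```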

Let me put it this way: it is also against recommendation to send a crafted fragmented packet to a server to cause an outage, but we’ve all tested our servers against such attacks.

I don’t think a big organization needs such a hack, as for them it’s just easier to upgrade RAM, but for the little guys and VPS owners this could mean the difference between 90% and 99.9% uptime. (I’ve got 99.7% uptime, but that’s my fault: I keep testing on the prod server.)

Here are the raw logs from today:

and the Monit logs

and lastly, here are the sar logs

As you can see, the page cache was cleaned 4 times overnight, and the service was restarted only once, when memory reached 97%. I had a massive spike of traffic (200%) at 3am. Normally, most servers would get stuck and become unresponsive when memory is at 97% or more (see the kernel panic issue mentioned above). This whole time, sar was just cruising along thinking everything was just fine (because it’s set to sample every 10 minutes to save resources) … We use 8GB+ RAM minimum at work for just reverse proxies, but a VPS is no match for that, as it’s doing everything: DNS, DB, web server, syslog, sshd, cPanel, backups, etc.
